HCI + AI Researcher

- Execution: Humans and LLMs now collaborate on open-ended, complex tasks. How can we make these interactions communicative so that they complement each party's strengths?

- Evaluation: LLM outputs (e.g., reports, series of actions) are long and hard to evaluate. How can users exercise oversight during the process when misalignments and threats are subtle?

Featured Projects

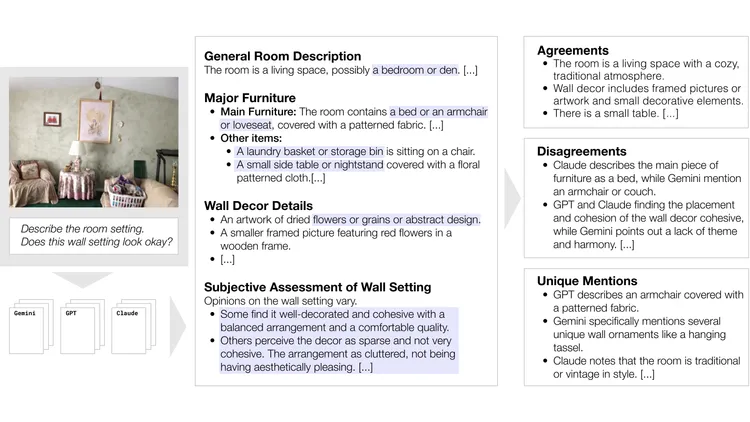

Surfacing Variations to Calibrate Perceived Reliability of MLLM-Generated Image Descriptions

Meng Chen, Akhil Iyer, Amy Pavel

ASSETS 2025

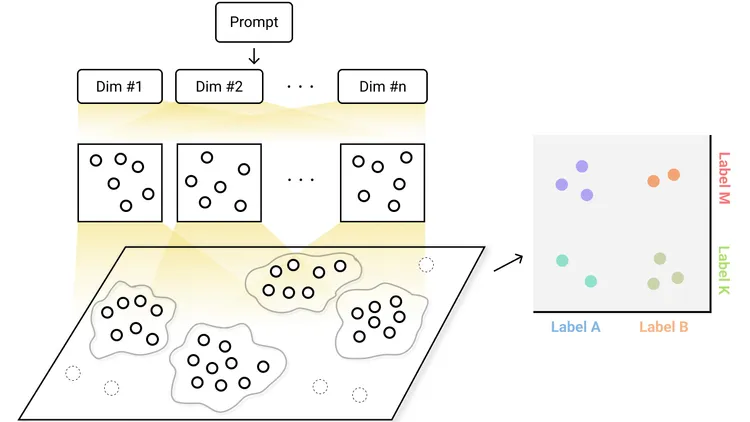

Luminate: Structured Generation and Exploration of Design Space with Large Language Models for Human-AI Co-Creation

Sangho Suh*, Meng Chen*, Bryan Min, Toby Jia-Jun Li, Haijun Xia

CHI 2024

News

- Oct 26 - 29, 2025: Presented Surfacing Variations to Calibrate Perceived Reliability of MLLM-Generated Image Descriptions at ASSETS 2025 in Denver, CO, USA.

- Sep 28 - Oct 1, 2025: Presented a poster for TaskArtisan at UIST 2025 in Busan, South Korea.

- Jan 2, 2025: Our paper Lotus: Creating Short Videos From Long Videos With Abstractive and Extractive Summarization is accepted to IUI 2025!